Text Rendering

Text rendering took only a few days to get right. But it is soooooo right that I had to share.

Rusttype

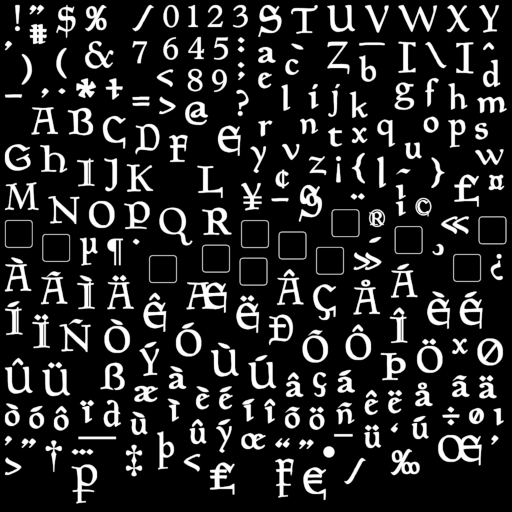

I could have used FreeType, but there is a pure Rust implementation of TTF rendering, so I used that to generate the original font atlas images. A font atlas is a single texture with every glyph rendered and packed into it, plus a table that records where each character sits. Here is an example for the Planewalker font (the original was 8192x8192 for accurate distance-from-edge information; I shrank this one):

Signed Distance Field

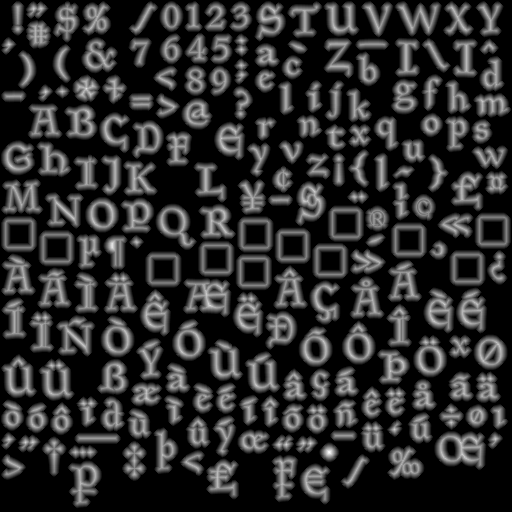

Valve Software came up with the brilliant idea of using the color depth to store "distance from edge" information for fonts, providing much more precision about where the edge of a glyph is supposed to be. Since pixel shaders interpolate between the pixels of a texture at high speed, they can not only render very precise edges at any scale, but also do subpixel rendering while they are at it, and even do things like outlining! The term for this kind of font rendering is Signed Distance Field. It is much faster than true font rendering with Bezier curves, and fits well into the graphics pipeline.

Here is the image converted to signed distance field (it looks fuzzy because each pixel represents distance from edge). Because so much information is encoded per pixel, the resolution of this image can be much smaller (I'm using 512x512).
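To make the edge reconstruction concrete, here is a minimal sketch of the math a pixel shader might run on each sampled distance value, written in Rust rather than shader code so it can be tested on the CPU. The function names, the convention that 0.5 marks the glyph edge, and the `softness` and `outline_width` parameters are all assumptions for illustration, not the shader this project actually uses.

```rust
/// Hermite smoothstep, equivalent to the GLSL built-in of the same name.
fn smoothstep(edge0: f32, edge1: f32, x: f32) -> f32 {
    let t = ((x - edge0) / (edge1 - edge0)).clamp(0.0, 1.0);
    t * t * (3.0 - 2.0 * t)
}

/// Coverage of the glyph itself.
/// `distance` is the interpolated texture sample in 0.0..=1.0, where 0.5 is
/// ASSUMED to be the glyph edge. `softness` is the half-width of the
/// anti-aliasing band around that edge.
fn sdf_alpha(distance: f32, softness: f32) -> f32 {
    smoothstep(0.5 - softness, 0.5 + softness, distance)
}

/// Coverage of glyph plus outline: the same test with the threshold pushed
/// outward by `outline_width` (in distance-field units, an assumed parameter).
fn sdf_outline_alpha(distance: f32, outline_width: f32, softness: f32) -> f32 {
    let outer_edge = 0.5 - outline_width;
    smoothstep(outer_edge - softness, outer_edge + softness, distance)
}
```

A pixel where `sdf_outline_alpha` is high but `sdf_alpha` is low lies in the outline band, so a shader could blend the outline color there and the fill color where `sdf_alpha` is high.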

Advance, Line Height, etc

The font atlas stores this information (advance, line height, and so on) for each character. The vertex generation code uses it to figure out the screen coordinates of each subsequent character. I have not implemented kerning yet.
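As a rough sketch of how advance and line height drive placement, the following walks a string and emits a pen position per character. `GlyphMetrics`, `FontMetrics`, and `layout` are hypothetical names invented for this example, the metrics are assumed to already be in pixels, and, matching the text above, there is no kerning.

```rust
use std::collections::HashMap;

/// Per-glyph data, as it might be stored in the atlas table (hypothetical layout).
#[derive(Clone, Copy, Debug, PartialEq)]
struct GlyphMetrics {
    /// Horizontal distance from this glyph's origin to the next one, in pixels.
    advance: f32,
}

/// Per-font data (hypothetical layout).
struct FontMetrics {
    /// Vertical distance between consecutive baselines, in pixels.
    line_height: f32,
    glyphs: HashMap<char, GlyphMetrics>,
}

/// Returns (character, x, y) for every drawable character in `text`.
/// '\n' starts a new line; characters missing from the table are skipped.
fn layout(text: &str, font: &FontMetrics, origin: (f32, f32)) -> Vec<(char, f32, f32)> {
    let (mut x, mut y) = origin;
    let mut placed = Vec::new();
    for ch in text.chars() {
        if ch == '\n' {
            x = origin.0;
            y += font.line_height;
            continue;
        }
        if let Some(glyph) = font.glyphs.get(&ch) {
            placed.push((ch, x, y));
            x += glyph.advance; // no kerning: the advance alone moves the pen
        }
    }
    placed
}
```

Kerning would slot in at the point where the pen advances, as an extra per-pair adjustment looked up from the previous and current character.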

Characters, Vertices, Quads, and Waste

We need two triangles to make a square. We could use TriangleStrip, which requires only four vertices. But there is a problem: a strip is one connected run of triangles, so without extra tricks (degenerate triangles, or primitive restart with an index buffer) we would not be able to render more than one character from the same vertex buffer. So we use TriangleList and use six vertices per character. That's a bit wasteful... but it gets worse.
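Here is a minimal sketch of the six-vertices-per-character expansion for a triangle list. The `Vertex` type uses plain floats for readability, and `push_quad`, its parameter names, and the winding order are assumptions for illustration; the correct winding depends on how the pipeline's culling is configured.

```rust
/// Unpacked vertex, for clarity only (hypothetical type).
#[derive(Clone, Copy, Debug, PartialEq)]
struct Vertex {
    pos: [f32; 2], // screen position
    uv: [f32; 2],  // position inside the font atlas
}

/// Append one character's quad as two independent triangles (six vertices).
/// Because each triangle stands alone, any number of quads can share one buffer.
fn push_quad(
    out: &mut Vec<Vertex>,
    pos_min: [f32; 2],
    pos_max: [f32; 2],
    uv_min: [f32; 2],
    uv_max: [f32; 2],
) {
    let top_left = Vertex { pos: [pos_min[0], pos_min[1]], uv: [uv_min[0], uv_min[1]] };
    let top_right = Vertex { pos: [pos_max[0], pos_min[1]], uv: [uv_max[0], uv_min[1]] };
    let bottom_left = Vertex { pos: [pos_min[0], pos_max[1]], uv: [uv_min[0], uv_max[1]] };
    let bottom_right = Vertex { pos: [pos_max[0], pos_max[1]], uv: [uv_max[0], uv_max[1]] };
    // The two corners on the shared diagonal are stored twice.
    out.extend_from_slice(&[
        top_left, top_right, bottom_left, // first triangle
        bottom_left, top_right, bottom_right, // second triangle
    ]);
}
```

The duplicated diagonal corners are exactly the waste described above: four distinct corners, six stored vertices.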

We have to pass information to the pixel shader: the font to use, the color to use, whether or not to outline, the color of the outline, transparency, and data for subpixel rendering. Now this information is all per-line-of-text, but vertex buffers specify data per-vertex (and there are six vertices per character). Rather than require an expensive buffer lookup, we duplicate this data into all six vertices of every character. Again, wasteful... but it gets worse.

Before it gets worse, it gets a little bit better, because I worked out a way to pack all this information into 96 bits per vertex: 32 bits for screen coordinates (16 bits for each dimension... two single-precision floats would normally take 64 bits), 32 for uv coordinates, and 32 for all the other data, packed into bits: 8 for alpha, 2 for font selection, 3 for color selection, 3 for outline color selection, 1 for whether outline is enabled, 8 for subpixel information, and 7 reserved.
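The third 32-bit word could be packed along these lines. The field widths come from the paragraph above, but the order of the fields within the word, and the names `TextStyle`, `PackedVertex`, `pack_style`, and `unpack_style`, are assumptions made up for this sketch.

```rust
/// The per-line-of-text settings, unpacked (hypothetical type).
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct TextStyle {
    alpha: u8,
    font: u8,          // 2 bits: 0..=3
    color: u8,         // 3 bits: 0..=7
    outline_color: u8, // 3 bits: 0..=7
    outline: bool,     // 1 bit
    subpixel: u8,      // 8 bits
}

/// One 96-bit vertex: three 32-bit words.
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct PackedVertex {
    xy: u32,    // 16 bits x, 16 bits y
    uv: u32,    // 16 bits u, 16 bits v
    style: u32, // see pack_style
}

/// ASSUMED bit order, lowest bit first:
/// alpha 0..=7, font 8..=9, color 10..=12, outline color 13..=15,
/// outline flag 16, subpixel 17..=24, reserved 25..=31 (left as zero).
fn pack_style(s: TextStyle) -> u32 {
    (s.alpha as u32)
        | (((s.font as u32) & 0x3) << 8)
        | (((s.color as u32) & 0x7) << 10)
        | (((s.outline_color as u32) & 0x7) << 13)
        | ((s.outline as u32) << 16)
        | ((s.subpixel as u32) << 17)
}

/// Inverse of `pack_style`; the shader would do the same shifts and masks.
fn unpack_style(bits: u32) -> TextStyle {
    TextStyle {
        alpha: (bits & 0xFF) as u8,
        font: ((bits >> 8) & 0x3) as u8,
        color: ((bits >> 10) & 0x7) as u8,
        outline_color: ((bits >> 13) & 0x7) as u8,
        outline: ((bits >> 16) & 0x1) == 1,
        subpixel: ((bits >> 17) & 0xFF) as u8,
    }
}
```

The widths add up to 8 + 2 + 3 + 3 + 1 + 8 + 7 = 32 bits, so the style word plus the two coordinate words give 12 bytes per vertex.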

We don't want to reallocate this vertex buffer all the time. So we just create one giant buffer (30,000 vertices), and use the front part of it, rebuilding all the vertex data any time any text on the screen changes (rather than doing memory allocation, dealing with holes, having the shader drop vertices where there are holes in the buffer, etc). Again, it feels wasteful rebuilding all the time. And because we keep updating it, we keep it in host-visible memory, so it is not optimized for the GPU.
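The allocate-once, rewrite-from-the-front pattern might look roughly like this. A plain `Vec` stands in for the mapped host-visible GPU memory, and `TextVertexBuffer`, `rebuild`, and the choice to silently truncate text that overflows the buffer are assumptions for illustration only.

```rust
/// Fixed capacity, matching the 30,000 vertices mentioned above.
const MAX_VERTICES: usize = 30_000;

/// Stand-in for the host-visible vertex buffer (hypothetical type).
/// Each vertex is three 32-bit words, i.e. 96 bits.
struct TextVertexBuffer {
    data: Vec<[u32; 3]>,
    /// How many vertices at the front are currently valid.
    used: usize,
}

impl TextVertexBuffer {
    /// Allocate the whole buffer once, up front.
    fn new() -> Self {
        Self { data: vec![[0; 3]; MAX_VERTICES], used: 0 }
    }

    /// Overwrite the front of the buffer with a freshly generated vertex list
    /// and return the vertex count to draw. No allocation happens here and no
    /// holes are tracked; stale data past `used` is simply never drawn.
    /// ASSUMPTION: vertices that do not fit are dropped.
    fn rebuild(&mut self, vertices: &[[u32; 3]]) -> usize {
        let count = vertices.len().min(MAX_VERTICES);
        self.data[..count].copy_from_slice(&vertices[..count]);
        self.used = count;
        count
    }
}
```

At six vertices per character, 30,000 vertices leave room for 5,000 characters on screen at once.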

You might think this would all be terribly slow. I sure did. Well, all of the UI rendering rounds to zero in my performance metrics, which means it takes less than 5 microseconds. So at this point I am not going to bother optimizing it.

The Result

Here is a sample, rendered, screen-capped, and cropped: